Amid debate about AI chatbots’ role in the classroom, Professor Mark Rosen incorporated AI into an assignment to experiment with chatbots’ educational potential.

The Office of Community Standards and Conduct has stated that “the use of ChatGPT or any similar AI programs” is considered plagiarism, and machine-generated language detectors are already in the works. But what will the proliferation of machine-generated work actually look like in UTD classrooms?

Mark Rosen, an associate professor of visual and performing arts, tried to incorporate ChatGPT in a recent “Understanding Art” assignment. Students first had to write a 700-to-900-word visual analysis of Louise Bourgeois’ bronze spider sculpture, “Maman,” at the Crystal Bridges Museum of American Art in Arkansas. The second part, however, asked students to work with an AI response generated from roughly the same prompt.

“I’ve been involved in pedagogical questions about this, just with my colleagues, but I came up with my own trial balloon,” Rosen said.

Students were not forced to improve the essay but were instead encouraged to read the bot’s work and give their reactions.

“[The AI response is] the baseline aggregate internet response to this question,” Rosen said.

He described the response as a computer word salad with a couple of factual errors and said that a lot of the writing was just “filling up space with descriptions of other works at the museum.”

Nonetheless, it wouldn’t have raised too many eyebrows.

“If I assumed [what they turned in] was their work and had no reason to doubt, we’d definitely give them a passing grade,” Rosen said. “It’s unfortunately true … and gonna lead to a very different type of assignment structure.”

The Center for Teaching and Learning hosted a workshop in early February to explore ChatGPT and address some of the concerns Rosen raised from his first experiment with the technology. The workshop also revealed the drastic changes students and educators will have to make in the near future.

Carol Lanham, professor of instruction in sociology, said that the workshop discussed three approaches that have emerged since these applications became available to students.

“Detection” was one framework, which relies on programs like Turnitin and GPTZero. A Jan. 13 article from turnitin.com showed that its software could detect text generated by ChatGPT and also tell when someone had edited the response to sound more human.

“We run it through this tool, and we see what percentage it is,” Lanham said.

The second approach could be “prevention,” which involves banning the app on university servers, using a Respondus LockDown Browser so that students can access only their test, or going back to basic pen-and-paper assessments.

“Another example would be to create prompts that are less likely to be found,” Lanham said.

Lanham said that educators could also take advantage of the fact that ChatGPT only has access to information up to 2021.

Finally, educators could consider a framework termed “innovation.”

“[We] really made the point that trying to stop the use of it would be like in the olden days, stopping the printing press, stopping the laptop and stopping the internet,” Lanham said.

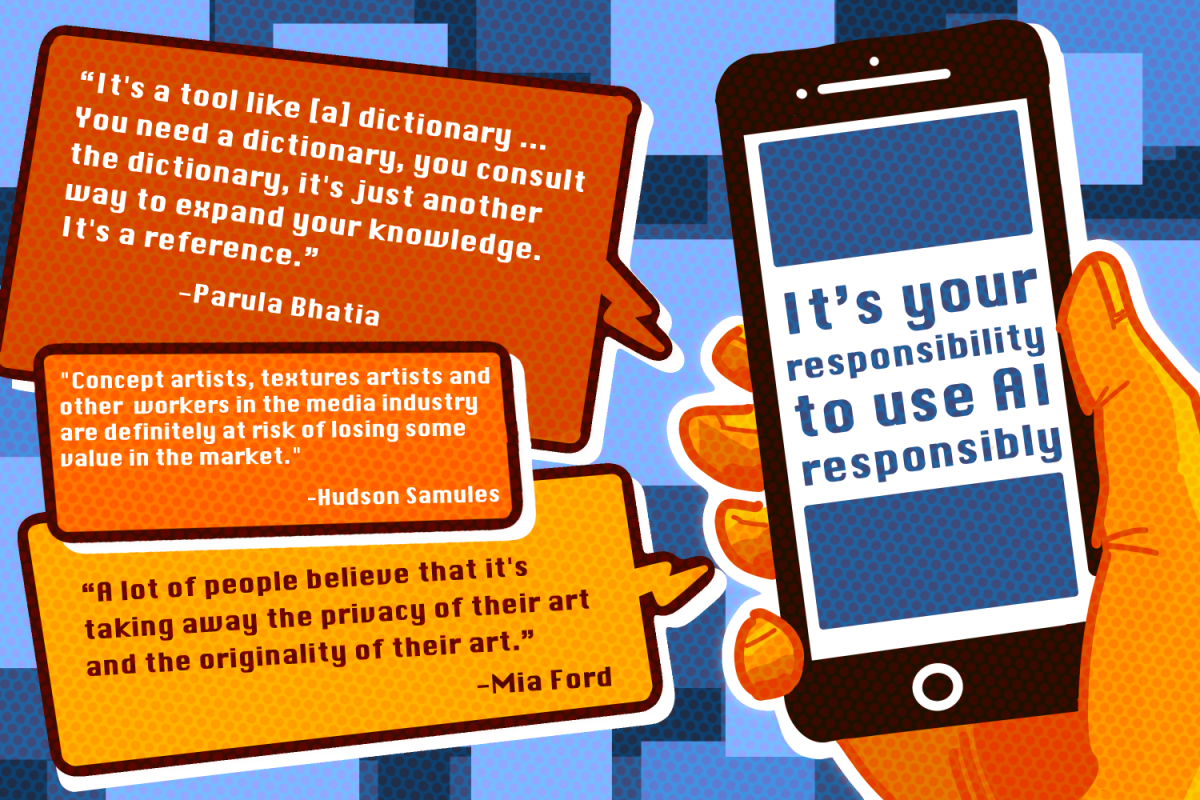

In a statement to The Mercury, the Writing Center said that ChatGPT is useful for paraphrasing and researching certain topics and that “overall, AI tools should have a positive impact for students as long as they are used ethically.”

Rosen’s art assignment fits the “innovation” category, allowing the creation of drafts and assignments by combining the efforts of humans and AI.

“There’s a lot of curiosity and interest, clearly, and it just makes sense that if we continue the conversation, we can come up with good strategies together,” Lanham said.

Rosen, however, said he wanted to understand how his students were feeling about AI on a deeper level.

“You have somebody else fulfilling your response for you now,” Rosen said. “What’s your personal take on it?”

In some ways, Rosen said, he saw ChatGPT’s shortcomings as a motivator for anyone who fears that AI will take their job or make them obsolete.

“You should do better than this, and you should be more personal than this,” Rosen said.