Aiyana Newcomb | Mercury Staff

Artificial intelligence, having evolved rapidly from answering simple questions to generating lifelike videos, has become impossible to ignore. OpenAI released the earliest version of GPT, the model family behind ChatGPT, in June 2018; the latest milestone, DALL-E 3, released in October 2023, can create detailed and realistic images, including faces and intricate text. UTD’s students and faculty include both staunch supporters of AI use for creative or educational projects and skeptics.

UTD embraces AI, allowing students and professors to use it to “automate or augment basic tasks, reinvent workflow, enhance worker performance, and accelerate research,” and even provides a prompt guide for Microsoft Copilot to staff and faculty. How students can use AI is left up to professors, whose responses range from banning technology in the classroom to assigning projects that incorporate AI.

On Aug. 30, ATEC graduate student Parul Bhatia participated in an AI art project titled “Black_GPT, an Afrofuturism Midjourney” which focused on African cultures. The project was a collection of different showcases led by graduate students and associate professor of arts and technology Andrew F. Scott, consisting completely of AI-generated visuals and speech.

Bhatia said AI did most of the heavy lifting for her project: producing background music, singing her poem with an AI singing model and generating images of women dancing to accompany it. The AI assistance allowed her to focus on editing her project and bringing her vision to life with carefully worded prompts.

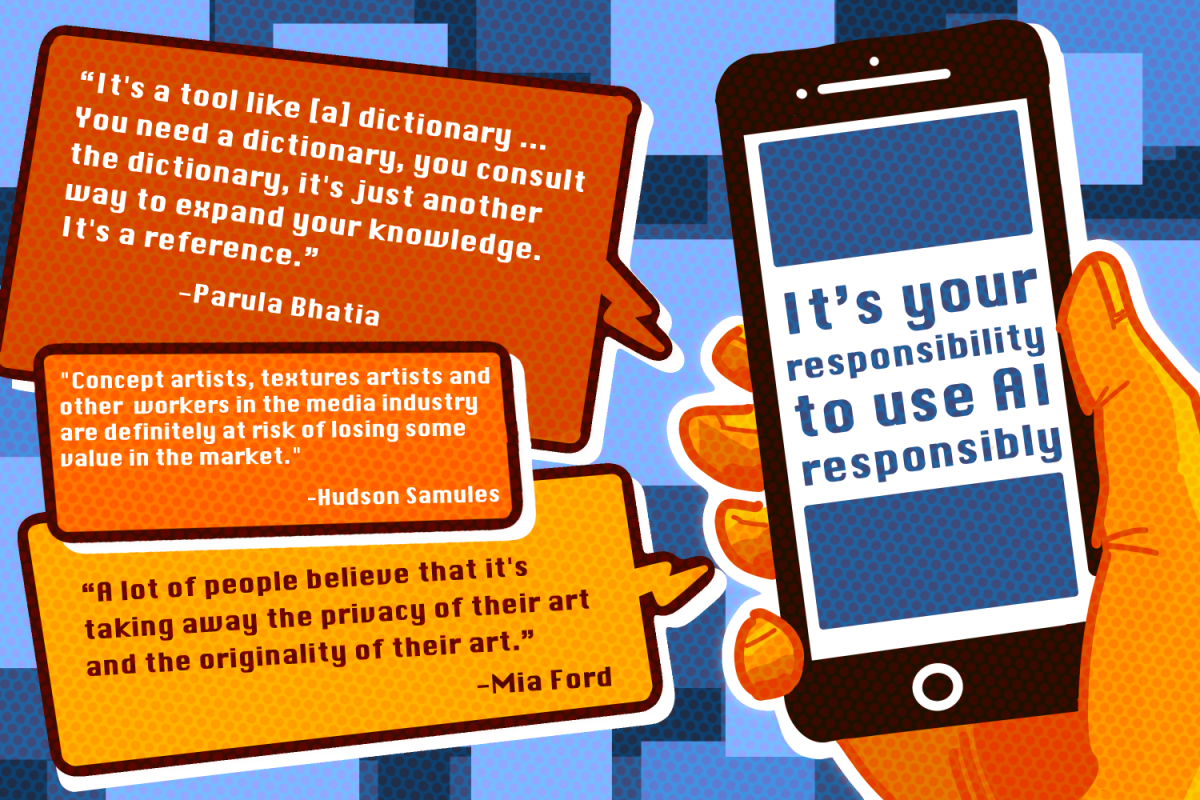

“It’s like a quasi human being who’s talking back to you,” Bhatia said. “I think it’s important to remember [AI] is a great tool. It’s a tool like [a] dictionary. At the end of the day you don’t remember all the words. You need a dictionary, you consult the dictionary, it’s just another way to expand your knowledge. It’s a reference.”

Some artists disagree with using AI for creative works and argue that AI-generated art often repurposes existing images. ATEC freshman Mia Ford said she has seen that writers and artists are heavily impacted, especially through loss of clientele and art theft, something she said her friends who do art commissions are incredibly unhappy with.

“AI just uses online images from other artists to generate its own images, which in a sense is plagiarism because it’s not crediting its references,” Ford said. “A lot of people believe that it’s taking away the privacy of their art and the originality of their art.”

Artists have opposed the progression of AI image generation, expressing their distaste through lawsuits and petitions. Some people in technology-focused industries or degree programs, such as computer science freshman Evan Olson, disagree with the idea that AI fully copies art styles or intellectual property.

“I don’t know if you could call it copied as much as inspired, even though it’s using the same base,” Olson said. “I think people do the same thing. You look at an image, you analyze the colors, the shapes, the style. And then if you’re making something based on that style, you have to use that color, shape and whatever else.”

Computer science graduate student Hudson Samuels said AI-generated work is only plagiarism if students try passing it off as their own creation or if they use media that is not in the public domain to generate the work. Samuels said work created by AI cannot be copyrighted, which protects artists because AI work itself has less monetary value.

“There [are] definitely a lot of bad actors abusing available media to steal from others, but we should be using this as a learning tool and not something to try and gain attention on social media,” Samuels said. “But in the majority of cases, it definitely is a very scary reality. Concept artists, textures artists and other workers in the media industry are definitely at risk of losing some value in the market.”

With AI’s proliferation sparking ethical concerns, UTD’s Artificial Intelligence Society, created to demystify AI, has dived into the ins and outs of AI through projects such as creating its own AI models. Suvel Muttreja, cognitive science graduate student and director of AI 5, a long-term AI project in AIS, said his background in psychology informs his caution about AI use.

“I noticed that some of my computer science friends don’t have that [ethical concern] as much,” Muttreja said. “They’re ‘progress over everything.’ But as a psychologist, I also have this concern [that] we’re using these technologies in a way that is exploitative of work that’s done by other humans. There’s people that have put blood, sweat and tears in the art that’s been created, writing that’s been done. Giving it to AI without permission to train off of and to imitate is definitely harmful.”

Moral concerns about AI have led to threats of data poisoning, where large amounts of data are changed or corrupted to alter the AI. The National Institute of Standards and Technology has warned that the main threat to the data lies with hacking groups, and ongoing data poisoning projects such as Glaze and Nightshade take advantage of AI’s algorithmic nature.

“It doesn’t know what it’s talking about,” Muttreja said. “The best way to explain ChatGPT [is] it’s like a parrot repeating information back to you. You can train a parrot for ten years — when I say, ‘Are you hungry?’ you say, ‘Yes food.’ The parrot never knows what ‘yes food’ means. It just knows that it has to say these sounds in the correct way and it gets food that way. Similarly, ChatGPT doesn’t know what the words it’s saying means … it’s just doing math calculations on the probability of the next word it should be saying.”

Some share Muttreja’s and artists’ concerns about the ethics of AI, while others look toward the future of AI, ready to blaze ahead. Olson said AI has the potential to be more important than electricity and that it has already had a huge impact, for better or worse.

“We’re entering a new era where the Internet will not be as useful as it was in the past 15 years,” Muttreja said. “Google [is] not going to be as useful as it was. We’re going to have to come back to using our own wits and our own senses to figure out how to extract relevant information from the world around us.”