AI models created to help train future healthcare professionals

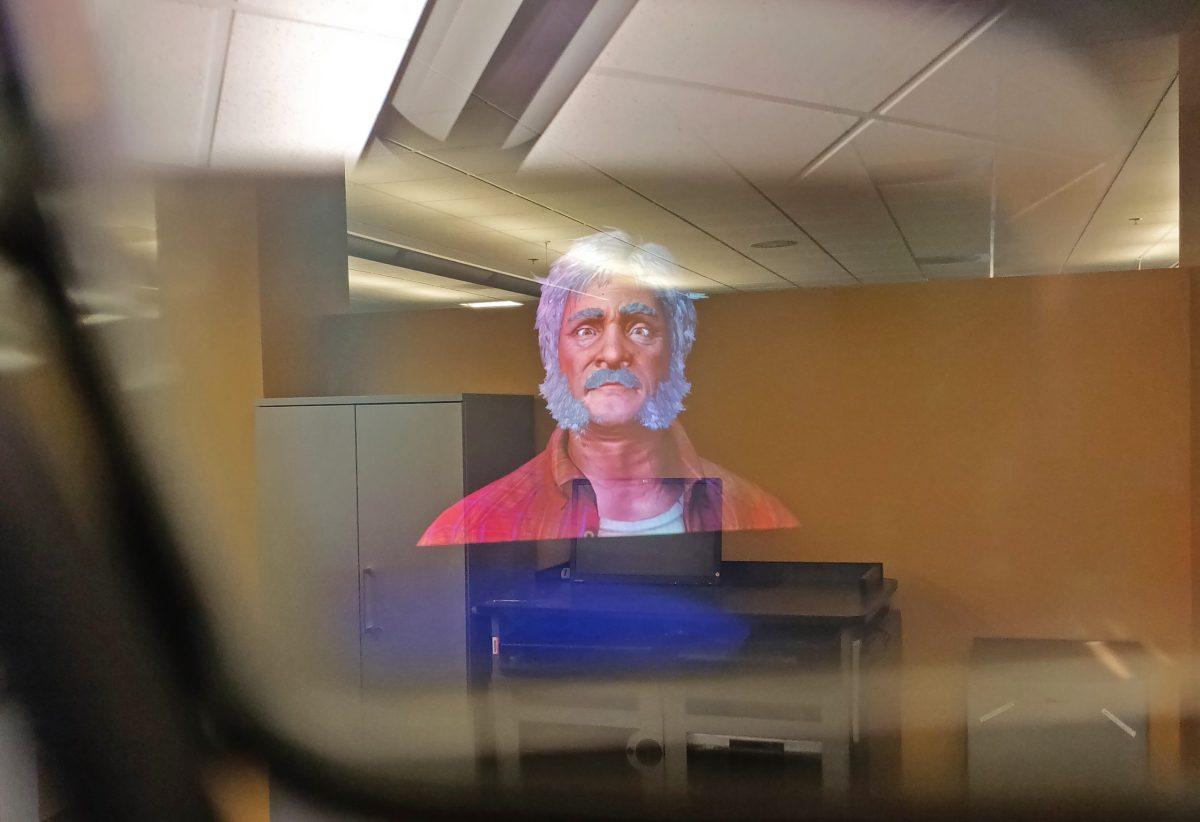

Joel Rizzo, a student programmer in the humans and synthetics lab, sits in front of a computer screen featuring an old man sitting on a hospital bed. Rizzo speaks to the virtual patient, gaining knowledge about the medical field through augmented reality technology, which UTD researchers hope can be used to train future doctors.

This virtual character is “Walter,” who was created by a team of university students alongside assistant professor of arts and technology Marjorie Zielke in the Center for Modeling and Simulation. Walter, who can also be interacted with using a Microsoft HoloLens, responds to the prospective doctor with his medical concerns. Students practice interacting with future patients by discerning Walter’s symptoms from verbal and nonverbal cues as well as his medical history and physical background such as diet and exercise.

Zielke said that the inspiration for the project came from colleagues at UT Southwestern.

“We’ve had a series of projects to help medical school students and related types of students learn how to communicate with patients,” Zielke said. “Our colleagues on this at UT Southwestern wanted a more realistic kind of environment that was not just sort of ‘pick the right answer’ but was more of a natural language process.”

Djahangir Zakhidov, a research scientist in Modeling and Simulation, modeled a mock interview of Walter using the HoloLens, an AR headset with built-in speakers.

“You can say something like, ‘Hi Walter.’ Or you can say, ‘What brings you here today?’” Zakhidov said. “And if you walk a little bit, he will track you with his face … he’ll keep looking at you.”

Rizzo, a software engineering graduate student, said that he sees this novel technology as a supplement to the current medical school training system, which relies on bringing actors who simulate the role of patients to examination rooms. Students then interview the mock patients to deduce their symptoms and are judged on both their professionalism and their ability to diagnose the patient correctly.

“This is designed to fill the gap. This is for when you are a medical student, you want to practice this, but you don’t want to do it in the middle of one of the regular testing training sessions that they do,” Rizzo said. “That’s where this would really shine, because this thing, you can have it sitting in a room. It’s always there. It’s always on, always ready to go.”

The team’s research was funded with a $75,000 grant from the National Science Foundation and was previously funded by the Southwestern Medical Foundation and the NSF’s US Ignite high-speed network research program.

Zielke said that this project’s focus on communication is something that has worked very well.

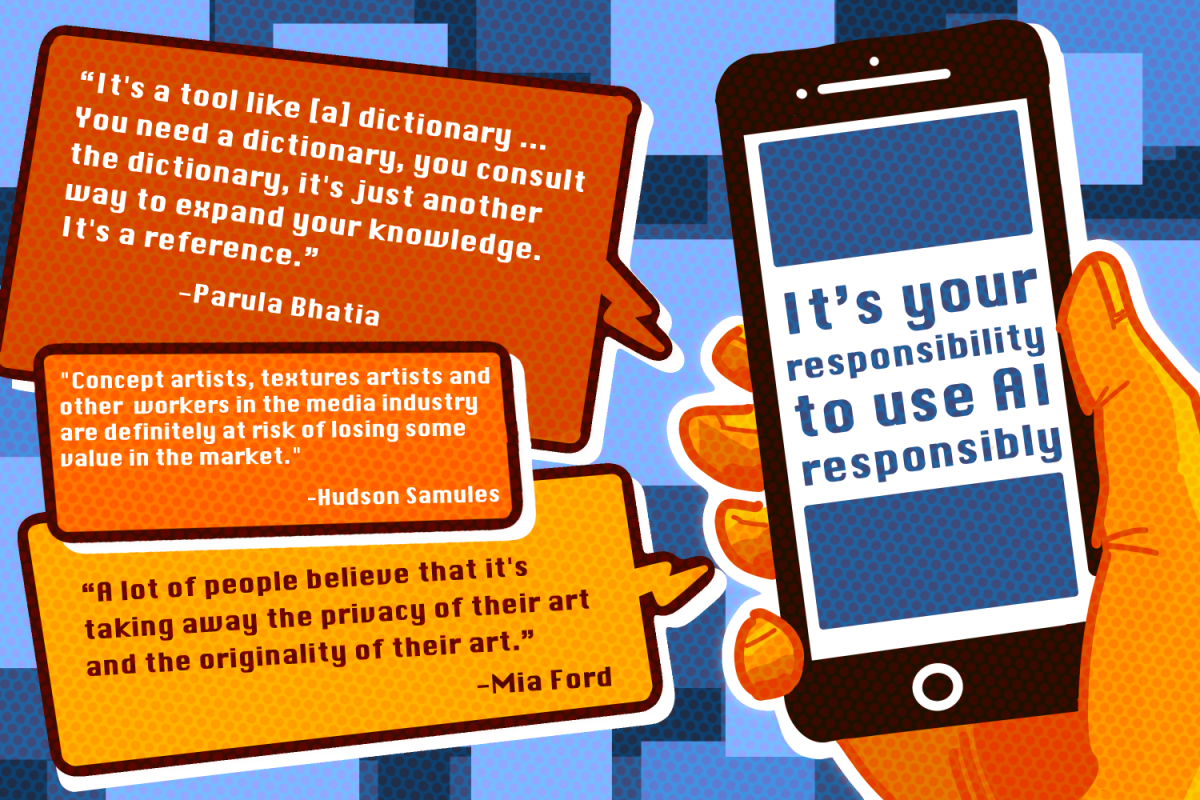

“NSF has this big initiative right now, which is the future of work. One of the major themes is as (artificial intelligence) becomes more available, what is the role of the human being versus machine?” Zielke said. “Communication is one of the enduring traits of human beings. We read lots and lots of scientific reports… and these all have this ongoing theme that communication will be one of the things that remains specifically human.”

Walter is only the first of many characters. Zielke and her team said that they are planning to create multiple virtual reality scenarios with different people to produce a diverse collection of situations that prospective doctors will have to face.

“There are three other characters that we’re developing. One is a virtual professor, and then two virtual students, a man or woman. So that’s also about social learning and how you can learn from peers,” Zielke said. “Ultimately, there’s going to be several characters, and then we’re going to be doing some experiments as to when you practice an interview, do you prefer to get feedback from a peer or professor, or virtual professor or a real professor?”

The researchers were able to compile information about facial cues and body language into an AI from sample interview footage made at UT Southwestern, Rizzo said. Since they’re developing this AI in conjunction with UT Southwestern, Zielke said, the experiments will be held there over the next few years. There will be no cost involved.

“There’s all kinds of possibilities for the future. There are other levels of health care professionals, healthcare students, all the way down to people who are currently in high school who are going to be different types of healthcare workers,” Zielke said. “This theme of being able to communicate with patients or patients’ families is equally important to them too, so there’s all sorts of different educational levels that you could use this for.”